Web cache introduction

A Web cache is a system for speeding up Web page loads. It is implemented both client-side (browser) and server-side (Content Delivery Network (CDN)/Web server).

Web cache optimization brings several benefits:

- On the user side, web pages display faster, which is especially useful when browsing on mobile. :racehorse:

- On the server side, the load is lower, and with the cloud's pay-as-you-go model, this translates into lower costs. :moneybag:

- And you reduce your carbon footprint by eliminating many unnecessary requests that browsers and servers would otherwise process. :seedling:

The context of my project

CyberGordon website static resources (images, CSS, JS, JSON) are hosted in an AWS S3 bucket behind AWS CloudFront (CDN). The architecture is presented in a dedicated blog post.

HTTPS requests for these static resources account for about half of the overall request volume: a typical user session (home page –> create an analysis –> view results) makes 9 static and 8 dynamic requests. The dynamic requests create the analysis and retrieve its results, as well as the overall CyberGordon statistics displayed on the home page.

So in March 2021, based on an AWS post, I started optimizing the caching of these static resources as much as possible, and I got good results and a lower bill! :ok_hand:

How I optimized the cache

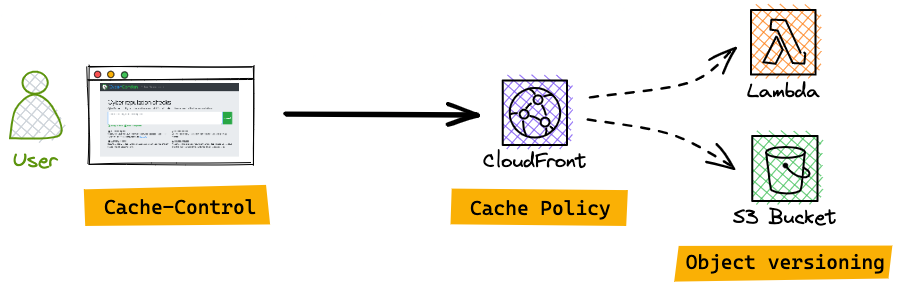

To achieve my goal, I used 3 features:

- Cache-Control header: add a cache directive to the static resources sent to users, for the web browser to honor.

- CloudFront cache policies: fine-tune how the CDN caches objects by default, and be able to reset that cache when needed.

- File versioning: cache resources that almost never change for the long term, while still being able to replace them quickly.

Production & automation requirements

It is essential to have a strategy that keeps control over the cache when an object changes (security fix, dependency upgrade, new logo, etc.), so the cache can be reset along the whole chain and users get the new object when they return to the website.

I decided that a changed item must reach users within 30 minutes at most.

I also tried to automate every repetitive task to avoid manual work.

User cache - Cache-Control header

The Cache-Control HTTP header gives caching instructions for an object to the web browser or to an intermediary service (proxy, CDN, …) that receives it. Put simply, it tells the web browser whether the object can be cached and for how long.
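To make these semantics concrete, here is a minimal Python sketch of how a browser-like cache could read this header. It is deliberately simplified and is an assumption about behavior, not a full implementation. It ignores s-maxage (which only applies to shared caches such as CDNs), the Expires header and heuristic freshness. The function names are hypothetical.

```python
from __future__ import annotations


def parse_cache_control(header: str) -> dict[str, str | None]:
    """Split a Cache-Control value into {directive: argument or None}."""
    directives: dict[str, str | None] = {}
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        name, sep, value = part.partition("=")
        # Directive names are case-insensitive; arguments may be quoted.
        directives[name.strip().lower()] = value.strip().strip('"') if sep else None
    return directives


def browser_freshness_seconds(header: str) -> int:
    """How long a browser may reuse the object without asking the server again."""
    directives = parse_cache_control(header)
    if "no-store" in directives or "no-cache" in directives:
        return 0  # not stored, or must be revalidated before every reuse
    try:
        return max(0, int(directives.get("max-age") or 0))
    except ValueError:
        return 0  # malformed max-age: treat the object as immediately stale
```

For example, browser_freshness_seconds("max-age=1800") returns 1800, i.e. the 30-minute cache used for web pages.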

I want visitors to keep as many objects as possible in their cache across one or two visits, so I set a 30-minute client-side cache for web pages.

I added a Cache-Control header to the following S3 objects:

- html: max-age=1800 –> 30 min cache

- js, css, svg, jpg, png, gif, pdf, json (except for statistic data), txt, xml: max-age=31536000, immutable –> 1 year cache

To add this header during deployment without manual work, I updated my CyberGordon Terraform configuration to set a specific Cache-Control header based on the file extension.
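The Terraform code itself isn't shown here. As an illustration only, the same extension-to-header mapping can be sketched in Python. Several details are assumptions:

- the function name is hypothetical;
- statistic files are assumed to be named stats_*.json, borrowed from the CloudFront path pattern below;
- giving robots.txt and sitemap.xml the 30-minute header follows the strategy table later in this post rather than the extension list above.

```python
from __future__ import annotations

from pathlib import PurePosixPath

SHORT_CACHE = "max-age=1800"                # 30 min
LONG_CACHE = "max-age=31536000, immutable"  # 1 year
LONG_CACHE_EXTENSIONS = {
    ".js", ".css", ".svg", ".jpg", ".png", ".gif", ".pdf", ".json", ".txt", ".xml",
}
# Assumption: these unversioned files follow the HTML row of the strategy table.
SHORT_CACHE_NAMES = {"robots.txt", "sitemap.xml"}


def cache_control_for(key: str) -> str | None:
    """Return the Cache-Control value to attach to an S3 object key, or None."""
    path = PurePosixPath(key)
    ext = path.suffix.lower()
    if ext == ".html" or path.name in SHORT_CACHE_NAMES:
        return SHORT_CACHE
    # Assumption: statistic files are named stats_*.json and change every 30 min.
    if ext == ".json" and path.name.startswith("stats_"):
        return SHORT_CACHE
    if ext in LONG_CACHE_EXTENSIONS:
        return LONG_CACHE
    return None  # unknown type: leave the header unset
```

In a Python deploy script, the returned value could be passed as the CacheControl argument of boto3's S3 put_object. In Terraform, the aws_s3_object resource exposes a cache_control argument for the same purpose.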

CDN cache - CloudFront cache policies

CloudFront is extremely powerful, but it quickly becomes complex once you push its settings further. I won’t present it in detail, as that would take a whole post, but you can learn more in the documentation.

A Cache Policy, attached to a cache behavior (URL path pattern), specifies the criteria (query strings, headers, cookies, etc.) and the TTLs used to store an object in the CDN cache. In addition to caching, we can enable compression of resources in transit to reduce the volume transferred between the CDN and the web browser.

I have applied 3 Cache Policies on CloudFront:

- Default (*) –> All static resources: 30-day cache with Gzip + Brotli compression

- assets/json/stats_* –> Statistics updated every 30 min, so 30-min cache with Gzip + Brotli compression

- request/*, get-request/*, r/*, contact-message –> Dynamic requests, so no cache
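CloudFront picks the first cache behavior whose path pattern matches the request, and evaluates the default (*) behavior last. The Python sketch below approximates that lookup with fnmatch. This is a simplification: fnmatch also understands [...] character classes, which CloudFront path patterns do not. The TTLs only restate the list above, and the names are hypothetical.

```python
from __future__ import annotations

from fnmatch import fnmatchcase

MINUTE = 60
DAY = 24 * 60 * MINUTE

# (path pattern, cache TTL in seconds), checked top to bottom like CloudFront behaviors.
BEHAVIORS: list[tuple[str, int]] = [
    ("assets/json/stats_*", 30 * MINUTE),  # statistics refreshed every 30 min
    ("request/*", 0),                      # dynamic requests: no cache
    ("get-request/*", 0),
    ("r/*", 0),
    ("contact-message", 0),
    ("*", 30 * DAY),                       # default behavior: all static resources
]


def cdn_ttl(path: str) -> tuple[str, int]:
    """Return the first matching (pattern, TTL) for a request path such as '/index.html'."""
    key = path.lstrip("/")
    for pattern, ttl in BEHAVIORS:
        if fnmatchcase(key, pattern):  # case-sensitive, like CloudFront path patterns
            return pattern, ttl
    raise LookupError(f"no behavior matches {path!r}")  # unreachable while '*' is last
```

Order matters: putting "*" first would make every request fall into the 30-day default, which is why the default behavior always comes last.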

These Cache Policies are easily managed through Terraform.

Static resources cache - File versioning

File versioning, also known as cache busting, is a very simple but very effective way to force a client to retrieve the new version of an updated resource: the resource's ‘version’ is added to its file name.

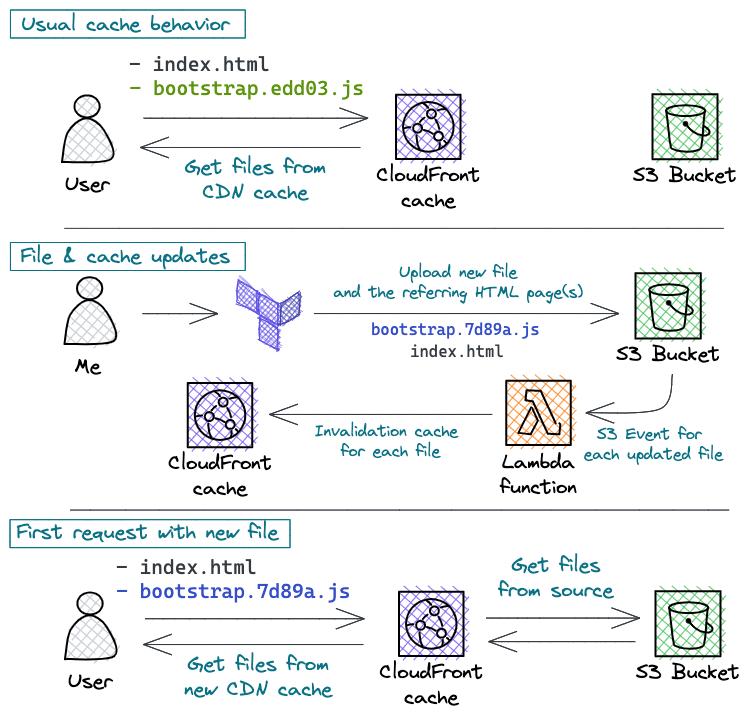

The image above shows a real case: the Bootstrap JavaScript file used on the CyberGordon website.

- A portion of the file's SHA-256 hash is added to the file name, giving each Bootstrap version upgrade a unique name.

- The HTML page references the Bootstrap file by this unique name. The web page (index.html) keeps its file name for life and is only cached for 30 minutes. Therefore, when a static resource is updated, only its reference in the HTML page changes (with the new hash), and the client loads the new version within 30 minutes.
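Here is a minimal Python sketch of the renaming step. It assumes the hash fragment is the first 8 hex characters of the SHA-256 digest, inserted before the extension. The exact length and position used on CyberGordon are not stated, and versioned_name is a hypothetical helper.

```python
import hashlib
from pathlib import PurePosixPath


def versioned_name(key: str, content: bytes, length: int = 8) -> str:
    """Insert a short SHA-256 fragment of the file content before its extension."""
    digest = hashlib.sha256(content).hexdigest()[:length]
    path = PurePosixPath(key)
    # 'bootstrap.min.js' -> stem 'bootstrap.min', suffix '.js'
    return str(path.with_name(f"{path.stem}.{digest}{path.suffix}"))
```

Because the name depends only on the content, an unchanged file keeps its name (and its cache entries) across deployments, while any byte change yields a new name.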

This method works for every static file type: I use it for all JavaScript, CSS and images (like the logo highlighted in gray in the image above), and also for the changelog JSON file.

Here again, I used Terraform to automate both the creation of the file name including the hash and the insertion of this unique name into the HTML pages that reference it. The code is horrible to read, a mixture of Join, Regex and Substr functions… but it works!
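The Terraform expression itself is not shown. Purely as an illustration of the same idea, the Python sketch below rewrites src and href attribute values in an HTML page, using a mapping from original to versioned names. The regular expression is a deliberately simple assumption (quoted attribute values only); a real pipeline might prefer an HTML parser. The function name is hypothetical.

```python
from __future__ import annotations

import re

# Matches src="..." or href='...' (quoted values only).
ATTR_RE = re.compile(r"""\b(src|href)=(["'])(.*?)\2""")


def rewrite_references(html: str, renames: dict[str, str]) -> str:
    """Point src/href attributes at the versioned file names listed in renames."""

    def swap(match: re.Match) -> str:
        attr, quote, url = match.groups()
        # Unknown URLs are left untouched.
        return f"{attr}={quote}{renames.get(url, url)}{quote}"

    return ATTR_RE.sub(swap, html)
```

Only the HTML page changes when a resource is updated, which is exactly why it gets the short 30-minute cache.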

What happens if I want to push a new resource?

CloudFront is our intermediate cache that we can act on directly to flush the cache and push updated resources to the clients.

This is what happens when I have to update a resource:

- Normally, a new client retrieves static resources (e.g. Bootstrap 4.6) from the CloudFront cache and then caches them in their web browser (or even on a corporate proxy).

- When updating a resource (Bootstrap 4.7), I push the new file via Terraform, which renames it with the new hash and updates the HTML pages that use it. Then the CloudFront cache is cleared for these pushed files only.

- Consequently, on the client's next visit (once the 30-minute HTML cache has expired), the browser retrieves the HTML page again and thus the newly referenced resource (Bootstrap 4.7).
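The post doesn't show how the invalidation is triggered. One possible approach, which is an assumption and not necessarily what runs on CyberGordon, is a deploy script that builds an invalidation request for the pushed keys and sends it with boto3's CloudFront create_invalidation call. The HTML pages must be in the list, since CloudFront keeps them for a month, while the new hashed files are not in the CDN cache yet. The helper name and the distribution ID are hypothetical.

```python
from __future__ import annotations

import time


def invalidation_batch(keys: list[str], caller_reference: str | None = None) -> dict:
    """Build the InvalidationBatch argument for CloudFront's CreateInvalidation API."""
    # CloudFront invalidation paths start with '/'; de-duplicate and sort for readability.
    paths = sorted({"/" + key.lstrip("/") for key in keys})
    return {
        "Paths": {"Quantity": len(paths), "Items": paths},
        # Should be unique for each new invalidation request; a timestamp is a simple choice.
        "CallerReference": caller_reference or f"deploy-{time.time_ns()}",
    }


# Hypothetical usage (needs AWS credentials; the distribution ID is a placeholder):
# import boto3
# boto3.client("cloudfront").create_invalidation(
#     DistributionId="EDFDVBD6EXAMPLE",
#     InvalidationBatch=invalidation_batch(["index.html"]),
# )
```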

CyberGordon cache strategy

With these 3 techniques and the update process in place, here is a summary of the caching principles and values.

The cache strategy follows these 3 principles:

- The client-side cache should not exceed 30 minutes for Web pages (HTML). After this time, the client must retrieve the latest version of the HTML files from the server.

- The client keeps all static resources (img, js, css, …) unmodified and versioned in the cache for up to one year.

- If a resource is modified, it must be possible to reset the CDN cache so that clients retrieve the latest version once their browser-side cache has expired (so in less than 30 min).

This table summarizes the current cache strategy on CyberGordon:

| Object | Versioning | CloudFront cache | Browser cache |
|---|---|---|---|
| HTML + sitemap/robots | No | 1 month | 30 min |
| Images | Yes | 1 month | 1 year |
| CSS | Yes | 1 month | 1 year |
| JavaScript | Yes | 1 month | 1 year |
| JSON Changelog + Engine lists | Yes | 1 month | 1 year |
| JSON Statistic (Updated) | No | 30 min | 30 min |
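The 30-minute principle can be checked against the table. This Python sketch encodes each row and computes the worst-case delay before a returning visitor sees a change. It rests on two assumptions: the CloudFront copy of every changed non-versioned file (HTML pages included) is invalidated at deploy time, and 1 month is taken as 30 days. The names are illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass

MINUTE = 60
DAY = 24 * 60 * MINUTE
MONTH = 30 * DAY  # assumption: "1 month" = 30 days
YEAR = 365 * DAY


@dataclass(frozen=True)
class CacheRule:
    versioned: bool
    cloudfront_ttl: int  # seconds; not used below because changed files are invalidated
    browser_ttl: int     # seconds


STRATEGY = {
    "HTML + sitemap/robots": CacheRule(False, MONTH, 30 * MINUTE),
    "Images": CacheRule(True, MONTH, YEAR),
    "CSS": CacheRule(True, MONTH, YEAR),
    "JavaScript": CacheRule(True, MONTH, YEAR),
    "JSON Changelog + Engine lists": CacheRule(True, MONTH, YEAR),
    "JSON Statistic (Updated)": CacheRule(False, 30 * MINUTE, 30 * MINUTE),
}

HTML_BROWSER_TTL = STRATEGY["HTML + sitemap/robots"].browser_ttl


def worst_case_delay(rule: CacheRule) -> int:
    """Seconds before a returning visitor gets a changed object, CDN copy invalidated."""
    if rule.versioned:
        # A new version has a new name: the visitor only needs a fresh HTML page.
        return HTML_BROWSER_TTL
    # Unversioned objects are reused from the browser cache until max-age expires.
    return rule.browser_ttl
```

Skipping the invalidation would add the CloudFront TTL (up to a month for HTML) to the HTML row. This is why resetting the CDN cache is part of the update process.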

Conclusion & results

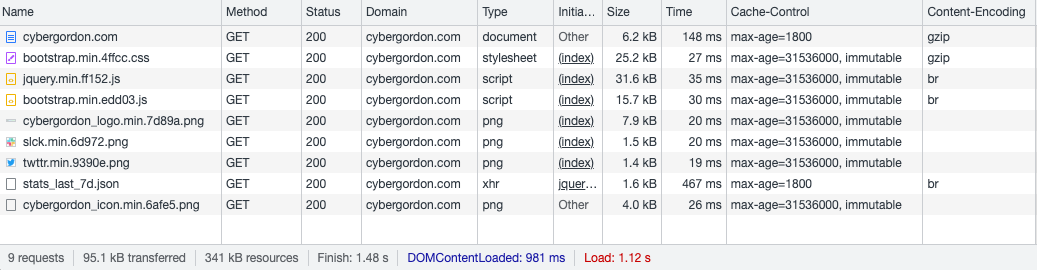

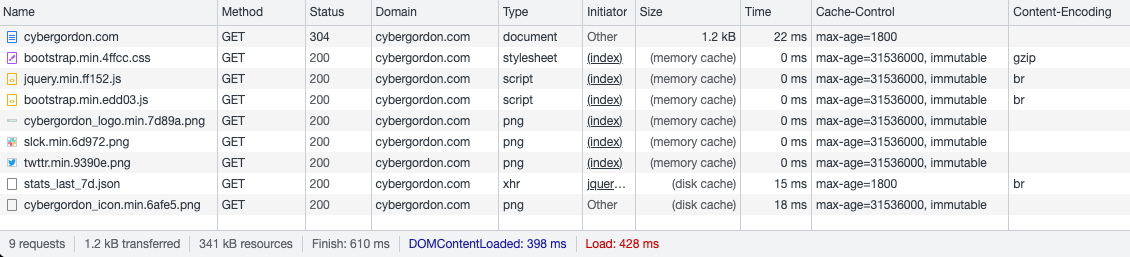

Implementing these techniques was not an easy task and required many tests, but the results since it went into production in June 2021 are worth it:

- 90% fewer HTTPS requests and 99% less volume (GB) transferred after the first visit.

- The cache hit rate of static resources jumped from 0 to 86% and the volume in GB transferred to the server (origin) decreased by almost 40%.

The pictures above show the result without cache and then with cache.

Moreover, on top of caching, compressing static resources (both in transit over the network and at the source file level) reduces the amount of data transferred. Images can easily be compressed with online tools without visible loss of quality. Compressing the resources reduced their overall size by 13%.
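To get a feel for how much text assets shrink in transit, you can make a quick estimate with Python's standard gzip module. This only approximates what CloudFront does at the edge; Brotli, which CloudFront also serves, is not in the standard library. The sample CSS is made up.

```python
import gzip


def gzip_savings(data: bytes, level: int = 9) -> float:
    """Fraction of bytes saved by gzip-compressing data (0.0 means no gain)."""
    if not data:
        return 0.0
    compressed = gzip.compress(data, compresslevel=level)
    return max(0.0, 1 - len(compressed) / len(data))


# Made-up, highly repetitive CSS: real stylesheets compress less dramatically.
sample_css = b".btn { color: #fff; background-color: #007bff; }\n" * 200
print(f"gzip saves {gzip_savings(sample_css):.0%}")
```

Already-compressed formats such as JPEG or PNG gain little from gzip, which is why images are better optimized at the source.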

Finally, I was able to automate the whole implementation with Terraform's powerful functions. Without Terraform, every resource change would mean a lot of tedious work…

:wave: