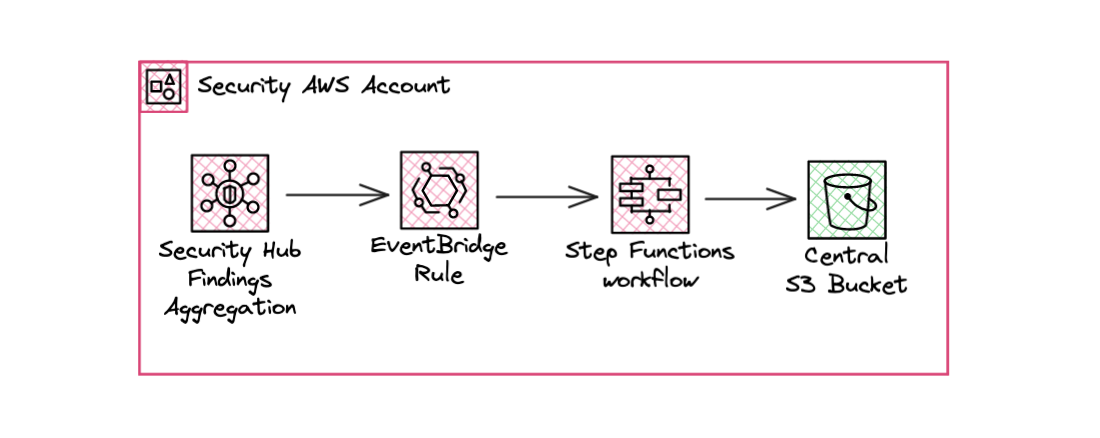

AWS Security Hub lets security teams centralize most of the findings from AWS services, but it does not provide a native feature to export them to an S3 bucket, as CloudTrail and GuardDuty do. I’m surprised by this lack of native export.

AWS already provides several solutions to export findings to a CSV file: on demand, on a schedule, or the whole finding history in one shot. But in the end, I needed a way to export them in near real time to an S3 bucket so that they could be ingested by a third-party SIEM solution.

Recommendations

- If you have multiple accounts in an AWS Organization, you should designate a delegated administrator account for Security Hub. This gives you a regional, aggregated view of the findings from all accounts.

- Then, create a cross-region aggregation to centralize the findings of all regions and accounts in a single view.

- Set up the following solution in the delegated administrator account, in the region you configured as the aggregation region.
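For reference, the first two recommendations map to two Security Hub API calls. Below is a minimal Python sketch using boto3's `securityhub` client; it assumes you pass one client with credentials for the Organizations management account and another for the delegated administrator account in the chosen aggregation region. Enabling Security Hub in member accounts is out of scope here.

```python
"""Sketch: delegated administrator + cross-region aggregation for Security Hub.

Assumption: callers pass boto3 clients, e.g. boto3.client("securityhub"),
created with the right credentials and region for each step.
"""
from typing import Any


def designate_admin(management_client: Any, admin_account_id: str) -> None:
    # Run with credentials for the Organizations management account.
    management_client.enable_organization_admin_account(AdminAccountId=admin_account_id)


def enable_cross_region_aggregation(admin_client: Any) -> str:
    # Run in the delegated administrator account, in the region that should
    # become the aggregation region; ALL_REGIONS links every other region.
    response = admin_client.create_finding_aggregator(RegionLinkingMode="ALL_REGIONS")
    return response["FindingAggregatorArn"]
```

With `ALL_REGIONS`, Security Hub also links regions you opt into later; `SPECIFIED_REGIONS` with a `Regions` list is the alternative if you only operate in a few.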

Managed export with Step Functions

Thanks to the simplicity and power of Step Functions, the AWS orchestration service, I was able to export findings easily and quickly (in less than 400 ms) into a dynamic tree structure based on the finding metadata.

Because Security Hub generates one EventBridge event for each individual finding, we can essentially copy the finding from the event’s detail and write it to the bucket. Note that, in the aggregation region, Security Hub pushes all findings, including those from linked regions, to the EventBridge event bus.

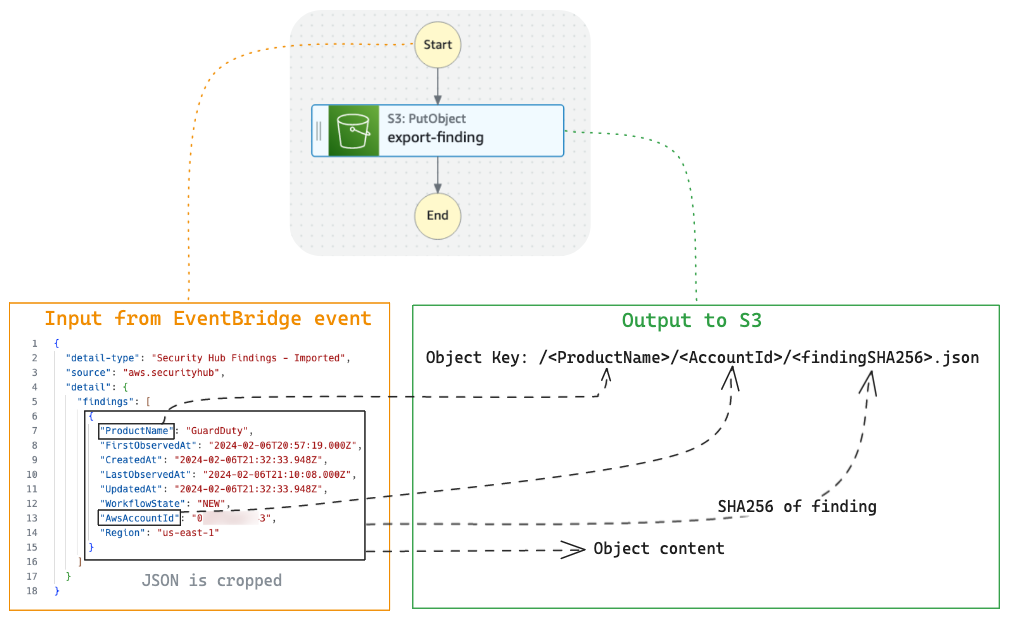

I store the findings in the S3 bucket so that they can be parsed by security product or by context. To create a dynamic tree, I used Step Functions intrinsic functions to file each finding under the source service that generated it and the AWS account where the event occurred.

The diagram above gives a schematic view of my Step Functions workflow; its definition is shown below.

Step Functions state machine definition:

```json
{
  "Comment": "Export all Security Hub findings to S3",
  "StartAt": "PutObject",
  "States": {
    "PutObject": {
      "Type": "Task",
      "Parameters": {
        "Body.$": "$",
        "Bucket": "<export-bucket-name>",
        "Key.$": "States.Format('{}/{}/{}.json', $.ProductName, $.AwsAccountId, States.Hash($, 'SHA-256'))"
      },
      "Resource": "arn:aws:states:::aws-sdk:s3:putObject",
      "InputPath": "$.detail.findings[0]",
      "End": true
    }
  }
}
```

In the PutObject step, I specified that the input is only a sub-part of the entire EventBridge event (`InputPath`: `$.detail.findings[0]`), which makes the rest of the configuration simpler:

- The object content is referenced simply with `$`, which refers to the root of the filtered input, i.e. the finding itself.

- The object key is built from three inputs: the source service (`$.ProductName`), the AWS account (`$.AwsAccountId`) and, as the object name, the SHA-256 hash of the finding content (`States.Hash($, 'SHA-256')`). I used `States.Format` to concatenate them.
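To check the key layout before deploying, the PutObject state's parameters can be reproduced locally. This Python sketch is not how Step Functions evaluates intrinsic functions internally: it assumes `States.Hash` can be approximated with SHA-256 over a compact, key-sorted JSON serialization, so its hashes will not necessarily match the object names Step Functions actually produces. The sample event is made up and heavily trimmed.

```python
import hashlib
import json
from typing import Any, Dict


def apply_input_path(event: Dict[str, Any]) -> Dict[str, Any]:
    # Equivalent of "InputPath": "$.detail.findings[0]".
    return event["detail"]["findings"][0]


def sha256_hex(value: Any) -> str:
    # Stand-in for States.Hash($, 'SHA-256'); the serialization is an assumption.
    data = json.dumps(value, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


def put_object_params(event: Dict[str, Any], bucket: str) -> Dict[str, Any]:
    finding = apply_input_path(event)
    # Equivalent of States.Format('{}/{}/{}.json', $.ProductName, $.AwsAccountId, <hash>).
    key = "{}/{}/{}.json".format(
        finding["ProductName"], finding["AwsAccountId"], sha256_hex(finding)
    )
    # "Body.$": "$" means the filtered finding itself becomes the object content.
    return {"Bucket": bucket, "Key": key, "Body": json.dumps(finding)}


if __name__ == "__main__":
    sample_event = {  # trimmed, made-up "Security Hub Findings - Imported" event
        "source": "aws.securityhub",
        "detail-type": "Security Hub Findings - Imported",
        "detail": {"findings": [{
            "Id": "example-finding-id",
            "ProductName": "GuardDuty",
            "AwsAccountId": "111111111111",
        }]},
    }
    print(put_object_params(sample_event, "<export-bucket-name>")["Key"])
```

The printed key has the shape `GuardDuty/111111111111/<64 hex characters>.json`, one folder level per metadata field.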

Initially, I wanted to use the Security Hub finding ID as the object name, but it can be very long (no length constraint is enforced on it) and can contain special characters that the S3 API could reject when the object is created.

I therefore used a hash of the finding as the object name to avoid any such failure. What mattered in my case was being able to feed a third-party security tool while still filtering on criteria such as the source service that detected a given problem.
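The difference is easy to see on a sample. The Python sketch below compares an ID-based key with a hash-based one, using a made-up ID in the ARN-like shape that some findings carry, and approximating `States.Hash` with SHA-256 over sorted JSON (an assumption). Slashes in such an ID silently add extra levels to the tree, while a SHA-256 hex digest is always 64 characters from `[0-9a-f]`.

```python
import hashlib
import json

S3_MAX_KEY_BYTES = 1024  # S3 object key names are limited to 1,024 bytes (UTF-8)


def key_from_id(prefix: str, finding: dict) -> str:
    # Naive approach: use the finding ID verbatim as the object name.
    return f"{prefix}/{finding['Id']}.json"


def key_from_hash(prefix: str, finding: dict) -> str:
    # Hash-based name; sorted, compact JSON approximates States.Hash's input.
    data = json.dumps(finding, sort_keys=True, separators=(",", ":"))
    return f"{prefix}/{hashlib.sha256(data.encode('utf-8')).hexdigest()}.json"


def extra_levels(prefix: str, key: str) -> int:
    # Each "/" after the prefix creates another "folder" level in the S3 console.
    return key[len(prefix) + 1:].count("/")


if __name__ == "__main__":
    prefix = "GuardDuty/111111111111"
    finding = {"Id": "arn:aws:guardduty:eu-west-1:111111111111:detector/abc/finding/def"}
    for key in (key_from_id(prefix, finding), key_from_hash(prefix, finding)):
        size = len(key.encode("utf-8"))
        print(size, size <= S3_MAX_KEY_BYTES, extra_levels(prefix, key), key)
```

With this sample, the ID-based key nests three extra levels below the account folder, whereas the hash-based key always stays at a fixed 92 characters for this prefix.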

Finally, using the hash as the object name ensures complete traceability: any change to a finding, such as a new occurrence or a status change, alters its content and therefore its hash, so every version is archived as a separate object.
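A quick way to convince yourself: hash two versions of a finding that differ only in workflow status and `UpdatedAt`. This Python sketch uses a made-up, heavily trimmed finding and approximates `States.Hash` with SHA-256 over sorted, compact JSON (an assumption, not Step Functions' exact serialization).

```python
import copy
import hashlib
import json


def object_name(finding: dict) -> str:
    # Deterministic name: same content gives same name, any change gives a new one.
    data = json.dumps(finding, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(data.encode("utf-8")).hexdigest() + ".json"


original = {"Id": "example-finding", "UpdatedAt": "2024-01-01T00:00:00Z", "Workflow": {"Status": "NEW"}}
updated = copy.deepcopy(original)
updated["Workflow"]["Status"] = "RESOLVED"
updated["UpdatedAt"] = "2024-01-02T00:00:00Z"

names = {object_name(original), object_name(updated)}
print(len(names))  # 2: both versions are archived as separate objects
```

The flip side of a deterministic name is that an identical finding delivered twice maps to the same key and simply overwrites itself, so exact duplicates do not pile up.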

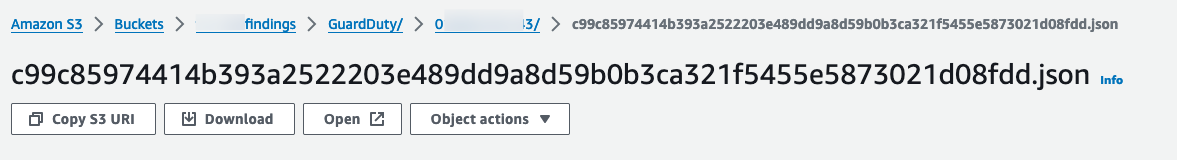

In the end, each object lands in a simple and effective tree structure, as in the example above.
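On the consumer side, this layout means a collector only needs an S3 prefix to pick up one product's findings, optionally narrowed to one account. Below is a minimal Python sketch assuming a boto3 S3 client and the `ProductName/AwsAccountId/<hash>.json` keys described earlier; the function names are hypothetical.

```python
"""Sketch of a consumer (e.g. a SIEM collector) reading a subset of the export.

Assumptions: keys follow ProductName/AwsAccountId/<hash>.json, and the caller
passes a boto3 S3 client, e.g. boto3.client("s3").
"""
from typing import Any, Iterator, Optional


def finding_prefix(product_name: str, account_id: Optional[str] = None) -> str:
    # Product first, then account: the same order as the exported keys.
    if account_id is None:
        return f"{product_name}/"
    return f"{product_name}/{account_id}/"


def list_finding_keys(s3_client: Any, bucket: str, prefix: str) -> Iterator[str]:
    # ListObjectsV2 returns at most 1,000 keys per call, hence the paginator.
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            yield obj["Key"]
```

Because the product name comes first in the key, filtering on one account across all products takes one listing per product; swapping the two levels would make the opposite trade-off.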

EventBridge & S3 setup

If you want to reproduce my example, here is the event pattern for the EventBridge rule that starts your Step Functions workflow. To start it, EventBridge must be authorised through an IAM role whose policy allows `states:StartExecution` on your state machine, so don’t forget to attach this execution role to your EventBridge target.
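The execution role mentioned in the previous paragraph needs two policy documents: a trust policy that lets EventBridge assume it, and a permissions policy that allows `states:StartExecution` on the state machine. A Python sketch that builds both; the state machine ARN (region, account ID, and name) is a placeholder.

```python
import json


def trust_policy() -> dict:
    # Lets the EventBridge service assume the role.
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "events.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }


def start_execution_policy(state_machine_arn: str) -> dict:
    # Lets the role start executions of this one state machine only.
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": "states:StartExecution",
            "Resource": state_machine_arn,
        }],
    }


if __name__ == "__main__":
    arn = "arn:aws:states:eu-west-1:111111111111:stateMachine:export-securityhub-findings"
    print(json.dumps(trust_policy(), indent=2))
    print(json.dumps(start_execution_policy(arn), indent=2))
```

With boto3, these documents could be passed as JSON strings to `iam.create_role(RoleName=..., AssumeRolePolicyDocument=...)` and `iam.put_role_policy(RoleName=..., PolicyName=..., PolicyDocument=...)`. Scoping `Resource` to the single state machine ARN keeps the role least-privileged.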

EventBridge rule event pattern:

```json
{
  "source": ["aws.securityhub"],
  "detail-type": ["Security Hub Findings - Imported"]
}
```
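This pattern matches events whose `source` and `detail-type` each equal one of the listed values. To try a pattern against sample events offline, here is a simplified Python matcher. It is an assumption-laden sketch that only covers exact-value matching (lists of allowed values, nested objects, arrays in the event) and none of EventBridge's content filters such as `prefix` or `anything-but`; the EventBridge `TestEventPattern` API remains the authoritative check.

```python
from typing import Any, Dict


def matches(pattern: Dict[str, Any], event: Dict[str, Any]) -> bool:
    """Simplified EventBridge matching: every pattern field must match."""
    for field, expected in pattern.items():
        if field not in event:
            return False
        value = event[field]
        # If the event holds an array, one matching element is enough.
        items = value if isinstance(value, list) else [value]
        if isinstance(expected, dict):
            # Nested pattern: recurse into the nested object(s).
            ok = any(isinstance(i, dict) and matches(expected, i) for i in items)
        else:
            # A list in the pattern means "any of these exact values".
            ok = any(i in expected for i in items)
        if not ok:
            return False
    return True


RULE_PATTERN = {
    "source": ["aws.securityhub"],
    "detail-type": ["Security Hub Findings - Imported"],
}
```

The same nesting lets you narrow the rule, for example by adding `"detail": {"findings": {"ProductName": ["GuardDuty"]}}` to export only GuardDuty findings.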

Finally, don’t forget to add a lifecycle rule to your S3 bucket to avoid a hefty bill in the long term, even though JSON exports stay small (in my tests, an average of 8 KB per finding).
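To put numbers on it, the stored volume is roughly findings per day × retention days × average size. The Python sketch below uses the 8 KB average mentioned above (treated as KiB); the 10,000 findings per day and the 365-day expiration are hypothetical values to adapt. It also builds a lifecycle configuration in the shape the S3 `PutBucketLifecycleConfiguration` API expects.

```python
def estimated_storage_gib(findings_per_day: int, retention_days: int, avg_kib: float = 8.0) -> float:
    # Steady state once expiration kicks in: daily volume x retention period.
    return findings_per_day * retention_days * avg_kib / (1024 * 1024)


def lifecycle_configuration(expiration_days: int = 365) -> dict:
    # Single rule expiring every exported finding after `expiration_days`.
    # With boto3: s3.put_bucket_lifecycle_configuration(
    #     Bucket="<export-bucket-name>", LifecycleConfiguration=lifecycle_configuration())
    return {
        "Rules": [
            {
                "ID": "expire-securityhub-exports",  # hypothetical rule name
                "Filter": {"Prefix": ""},  # applies to the whole bucket
                "Status": "Enabled",
                "Expiration": {"Days": expiration_days},
            }
        ]
    }


if __name__ == "__main__":
    print(f"{estimated_storage_gib(10_000, 365):.1f} GiB")  # prints 27.8 GiB
```

Storage-class transitions are probably not worth it here: S3 Standard-IA and One Zone-IA bill objects smaller than 128 KB as 128 KB, which would outweigh the savings for files of a few kilobytes. Expiration alone keeps the bucket bounded.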

When will AWS offer this native integration?

Before I discovered Step Functions, I had built this solution with a Lambda function invoked by EventBridge. That meant writing code and maintaining it (upgrading runtimes, etc.). Step Functions eliminates this maintenance. However, I’m still waiting for AWS to offer this export natively in Security Hub.
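For comparison, a Lambda equivalent would look roughly like the Python sketch below. This is an illustration, not the original function: it assumes the Python runtime (which bundles boto3), a hypothetical `EXPORT_BUCKET` environment variable, and SHA-256 over sorted, compact JSON as the object name. Even this small handler is code to test, package, and move to new runtimes, which is the upkeep Step Functions removes.

```python
import hashlib
import json
import os
from typing import Any, Optional

_s3 = None  # cached client, reused across warm invocations


def _client() -> Any:
    global _s3
    if _s3 is None:
        import boto3  # imported lazily so the logic can be tested without it
        _s3 = boto3.client("s3")
    return _s3


def handler(event: dict, context: Any, s3: Optional[Any] = None) -> dict:
    s3 = s3 or _client()
    # One finding per "Security Hub Findings - Imported" event.
    finding = event["detail"]["findings"][0]
    body = json.dumps(finding, sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
    key = f"{finding['ProductName']}/{finding['AwsAccountId']}/{digest}.json"
    s3.put_object(
        Bucket=os.environ["EXPORT_BUCKET"],  # hypothetical variable name
        Key=key,
        Body=body.encode("utf-8"),
        ContentType="application/json",
    )
    return {"key": key}
```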